Projected Nesterov's Proximal-Gradient Algorithms for Sparse Signal Reconstruction with a Convex Constraint

Renliang Gu and Aleksandar Dogandžić

Summary

We propose a method to solve the following optimization problem

\begin{equation} \label{eq:f} \text{minimize}\qquad f(\bx)=\cL(\bx) + u\, r(\bx) \end{equation}

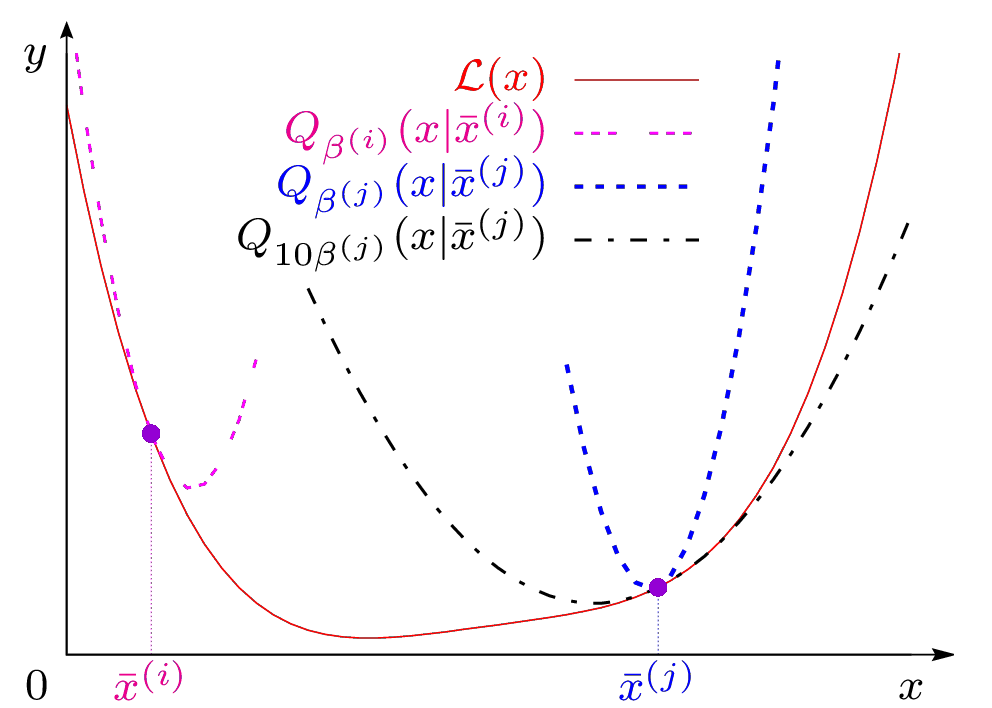

with respect to the signal \(\bx\), where \(\cL(\bx)\) is a convex differentiable data-fidelity term (the negative log-likelihood, NLL), and \(u>0\) is a scalar tuning constant that weights the convex regularization term \(r(\bx)\), which imposes signal sparsity and the convex-set constraint:

\begin{equation} \label{eq:r} r(\bx)=\norm{\Psi^H\bx}_1 + \mathbb{I}_C(\bx) \end{equation}

where \(C\) is a closed convex set, \(\mathbb{I}_C(\bx)\) is its indicator function (equal to \(0\) if \(\bx \in C\) and \(+\infty\) otherwise), and \(\Psi^H \bx\) is a signal transform-coefficient vector with most elements having negligible magnitudes. A real-valued \(\Psi \in \mathbb{R}^{p\times p'}\) can accommodate the discrete wavelet transform (DWT) or gradient-map sparsity with the anisotropic total-variation (TV) sparsifying transform (with \(\Psi=[\Psi_\text{v}\,\,\Psi_\text{h}]\)); a complex-valued \(\Psi = \Psi_\text{v} + j \Psi_\text{h} \in \mathbb{C}^{p\times p'}\) can accommodate gradient-map sparsity with the 2D isotropic TV sparsifying transform. Here, \(\Psi_\text{v}, \Psi_\text{h} \in \mathbb{R}^{p\times p'}\) are the vertical and horizontal difference matrices.
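To illustrate the regularizer in (2) for images, the sketch below evaluates \(r(\bx)\) for a 2D array with both TV variants. It assumes, for illustration only, that \(C\) is the nonnegative orthant and that \(\Psi_\text{v}^T\bx\) and \(\Psi_\text{h}^T\bx\) are first-order differences between vertically and horizontally adjacent pixels, set to zero on the last row and column (our boundary choice). The helper names `differences`, `indicator_nonneg`, `r_anisotropic`, and `r_isotropic` are hypothetical. For real \(\bx\), the complex-valued \(\Psi = \Psi_\text{v} + j\Psi_\text{h}\) gives \(\Psi^H\bx = \Psi_\text{v}^T\bx - j\Psi_\text{h}^T\bx\), whose element-wise modulus is \(\sqrt{(\Psi_\text{v}^T\bx)^2 + (\Psi_\text{h}^T\bx)^2}\), i.e., the isotropic TV.

```python
import numpy as np

def differences(x):
    """Vertical and horizontal first-order differences of a 2D array.

    Assumption: differences are zero on the last row/column (replicated
    boundary), so both maps have the same shape as x.
    """
    dv = np.diff(x, axis=0, append=x[-1:, :])  # x[i+1, j] - x[i, j]
    dh = np.diff(x, axis=1, append=x[:, -1:])  # x[i, j+1] - x[i, j]
    return dv, dh

def indicator_nonneg(x):
    """Indicator of the assumed set C = {x : x >= 0}: 0 inside, +inf outside."""
    return 0.0 if np.all(x >= 0) else np.inf

def r_anisotropic(x):
    # Real-valued Psi = [Psi_v  Psi_h]: l1 norm of the stacked differences.
    dv, dh = differences(x)
    return np.abs(dv).sum() + np.abs(dh).sum() + indicator_nonneg(x)

def r_isotropic(x):
    # Complex-valued Psi = Psi_v + j Psi_h: Psi^H x = dv - j*dh, and its
    # element-wise modulus is sqrt(dv**2 + dh**2).
    dv, dh = differences(x)
    return np.abs(dv - 1j * dh).sum() + indicator_nonneg(x)

x = np.array([[0.0, 1.0], [1.0, 1.0]])
print(r_anisotropic(x), r_isotropic(x))
```

For this `x`, the anisotropic value is 2 and the isotropic value is \(\sqrt{2} \approx 1.414\), consistent with \(\sqrt{a^2+b^2} \le |a|+|b|\); any negative pixel makes both values \(+\infty\) through the indicator term.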

This work is supported by the National Science Foundation under Grant CCF-1421480.

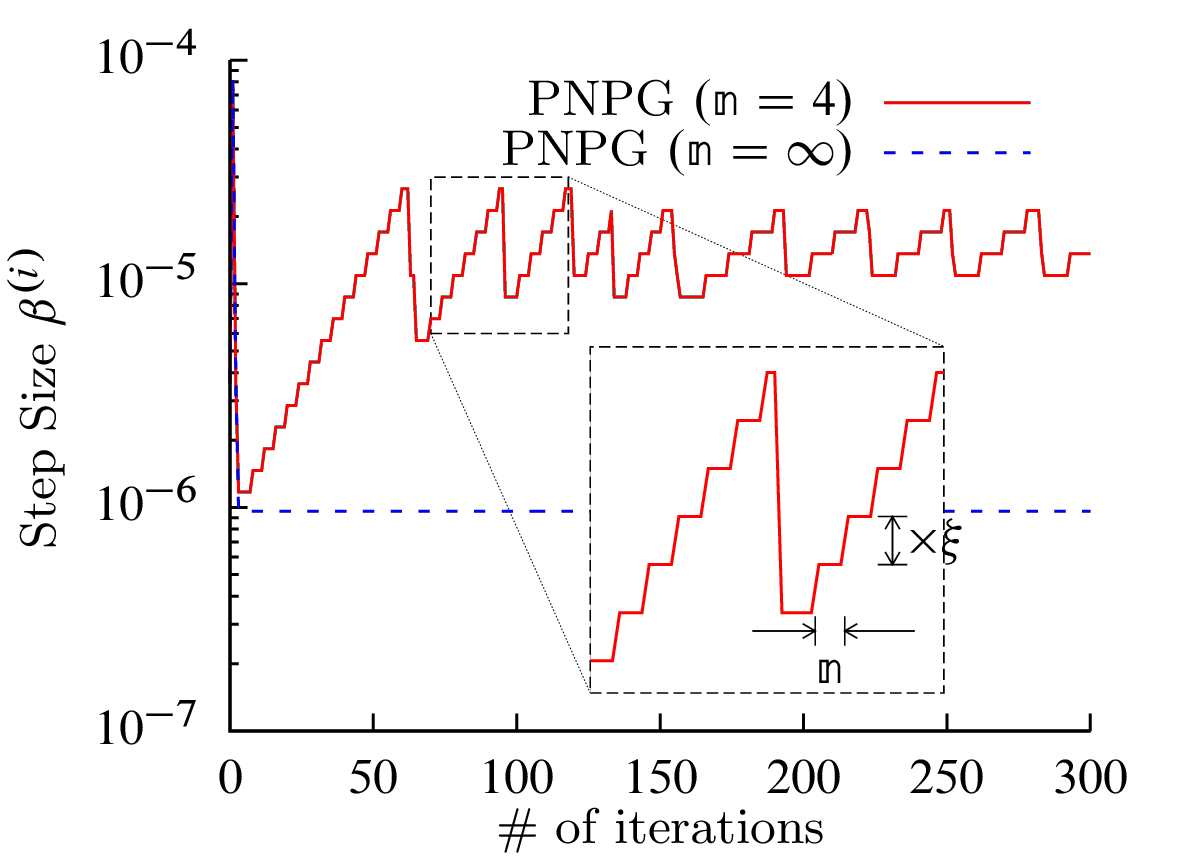

We propose an adaptive step-size strategy and present a convergence analysis that accounts for the adaptive step size, inexactness of the iterative proximal mapping, and the convex-set constraint.
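The following is a simplified sketch of a projected Nesterov proximal-gradient iteration with step-size backtracking and adaptation, written for this page under strong assumptions: a Gaussian NLL \(\cL(\bx) = \tfrac{1}{2}\|\mathbf{y} - \Phi\bx\|_2^2\), \(\Psi = I\), and \(C\) the nonnegative orthant, so that the proximal mapping of \(u\,r\) is exact (\(\max(\mathbf{v} - \beta u, 0)\)) and no inner iteration is needed. The function `pnpg_sketch` and its parameters `shrink`, `grow`, and `m` are hypothetical, and the momentum rule \(\theta_k = \tfrac12 + \sqrt{\tfrac14 + (\beta_{k-1}/\beta_k)\,\theta_{k-1}^2}\) is our FISTA-type choice. Restart and the inexact proximal mapping analyzed in the paper are omitted, so this is not the authors' PNPG implementation.

```python
import numpy as np

def pnpg_sketch(Phi, y, u, beta=1.0, n_iter=200, shrink=0.5, grow=1.6, m=3):
    """Simplified projected Nesterov proximal-gradient sketch (hypothetical).

    Assumes NLL(x) = 0.5*||y - Phi x||^2, Psi = I, C = {x >= 0}; the prox of
    beta*u*(||x||_1 + I_C(x)) is then max(v - beta*u, 0).
    """
    def nll(x):
        res = y - Phi @ x
        return 0.5 * res @ res

    def grad(x):
        return Phi.T @ (Phi @ x - y)

    x = x_prev = np.zeros(Phi.shape[1])
    theta, beta_prev = 1.0, beta
    no_backtrack = 0  # consecutive iterations without backtracking
    for _ in range(n_iter):
        backtracked = False
        while True:
            # Momentum parameter, scaled by the step-size ratio (our choice).
            theta_new = 0.5 + np.sqrt(0.25 + (beta_prev / beta) * theta**2)
            # Projected momentum step: extrapolate, then project onto C.
            x_bar = np.maximum(x + (theta - 1) / theta_new * (x - x_prev), 0.0)
            g = grad(x_bar)
            x_new = np.maximum(x_bar - beta * g - beta * u, 0.0)  # exact prox
            d = x_new - x_bar
            # Accept if the quadratic majorizer of the NLL holds; else shrink.
            if nll(x_new) <= nll(x_bar) + g @ d + d @ d / (2 * beta):
                break
            beta *= shrink
            backtracked = True
        x_prev, x, theta, beta_prev = x, x_new, theta_new, beta
        no_backtrack = 0 if backtracked else no_backtrack + 1
        if no_backtrack >= m:  # adaptation: try a larger step size
            beta *= grow
            no_backtrack = 0
    return x

Phi = 2.0 * np.eye(3)
y = np.array([3.0, 0.5, -1.0])
print(pnpg_sketch(Phi, y, u=1.0))
```

With \(\Phi = 2I\), \(\mathbf{y} = (3, 0.5, -1)\), and \(u = 1\), the constrained minimizer is \((1.25, 0, 0)\), which the sketch should recover to within numerical tolerance. Increasing the step size after `m` iterations without backtracking is what lets the method track a larger local step than a fixed worst-case \(1/L\) choice would allow.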


Download

Clone the repository, or press the “Download ZIP” button in the right panel of PNPG's GitHub page.

Reference:

R. Gu and A. Dogandžić, “Projected Nesterov’s proximal-gradient algorithm for sparse signal recovery,” IEEE Trans. Signal Process., vol. 65, no. 13, pp. 3510–3525, 2017. [BibTeX] [DOI] [PDF]

@STRING{IEEE_J_SP = "{IEEE} Trans. Signal Process."}

@article{gd16convexsub,
  Author = {Renliang Gu and Aleksandar Dogand\v{z}i\'c},
  Title = {Projected {N}esterov's Proximal-Gradient Algorithm for Sparse Signal Recovery},
  Journal = IEEE_J_SP,
  Volume = 65,
  Number = 13,
  pages = {3510--3525},
  doi = {10.1109/TSP.2017.2691661},
  Year = 2017
}

R. Gu and A. Dogandžić, “Projected Nesterov's proximal-gradient algorithm for sparse signal reconstruction with a convex constraint,” arXiv:1502.02613 [stat.CO], May 2016.

R. Gu and A. Dogandžić, “Projected Nesterov’s proximal-gradient signal recovery from compressive Poisson measurements,” in Proc. Asilomar Conf. Signals, Syst. Comput., Pacific Grove, CA, Nov. 2015, pp. 1490–1495. [DOI] [BibTeX] [PDF]

@string{asilomar = {{Proc. Asilomar Conf. Signals, Syst. Comput.}}}

@inproceedings{gdasil15,
  Address = {Pacific Grove, CA},
  Author = {Renliang Gu and Aleksandar Dogand\v{z}i\'c},
  Booktitle = Asilomar,
  Month = nov,
  Title = {Projected {N}esterov's Proximal-Gradient Signal Recovery from Compressive {P}oisson Measurements},
  Year = {2015},
  pages = {1490--1495}
}

Our code has been used in:

John P. Dumas, Muhammad A. Lodhi, Waheed U. Bajwa, and Mark C. Pierce, “Computational imaging with a highly parallel image-plane-coded architecture: challenges and solutions,” Opt. Express, 24, 6145-6155 (2016).